Elizabeth Hall

I recently graduated from UC Davis with a PhD in Vision Science, where I worked with Joy Geng in the Integrated Attention Lab. I previously worked with Chris Baker in the Laboratory of Brain and Cognition at the NIH and with Doug Davidson at the Basque Center for Cognition, Brain, and Language. I spent summer 2023 as a data science intern with the Alexa Economics & Measurement team at Amazon.

ehlhall1 @ gmail dot com | google scholar | twitter | github | CV

news

6/2025: Graduated and started work at Meta Reality Labs!

12/2023: New paper with Joy Geng on object attention and boundary extension!

8/2023: I was awarded the UC President's Dissertation Year Fellowship!

7/2022: New paper with Zoe Loh on working memory and fixation durations in scene-viewing!

9/2020: Work with Chris Baker and Wilma Bainbridge on encoding and recall of objects and scenes in 7T fMRI is now out in Cerebral Cortex!

4/2020: I was awarded the National Defense Science and Engineering Graduate Fellowship to pursue work on visual attention in virtual reality.

preprints

Objects in focus: How object spatial probability underscores eye movement patterns

Elizabeth H. Hall, Zoe Loh, John M. Henderson

PsyArXiv, 2024. Github

A paper documenting our process to segment 2.8k objects across 100 real-world scenes! We share our thoughts on the "best way" to segment objects, and analyses showing that image size and perspective have a big impact on the distribution of fixations. Full tutorial coming soon!

Eye gaze during route learning in a virtual task

Martha Forloines*, Elizabeth H. Hall*, John M. Henderson, Joy J. Geng

*co-first authors

PsyArXiv, 2024.

We tracked eye movements while viewers studied an avatar navigating in Grand Theft Auto V. We trained a classifier to determine whether they learned the route in natural or scrambled order. Natural viewers preferred to attend to the path ahead, while scrambled viewers focused more on landmark buildings and signs.

publications

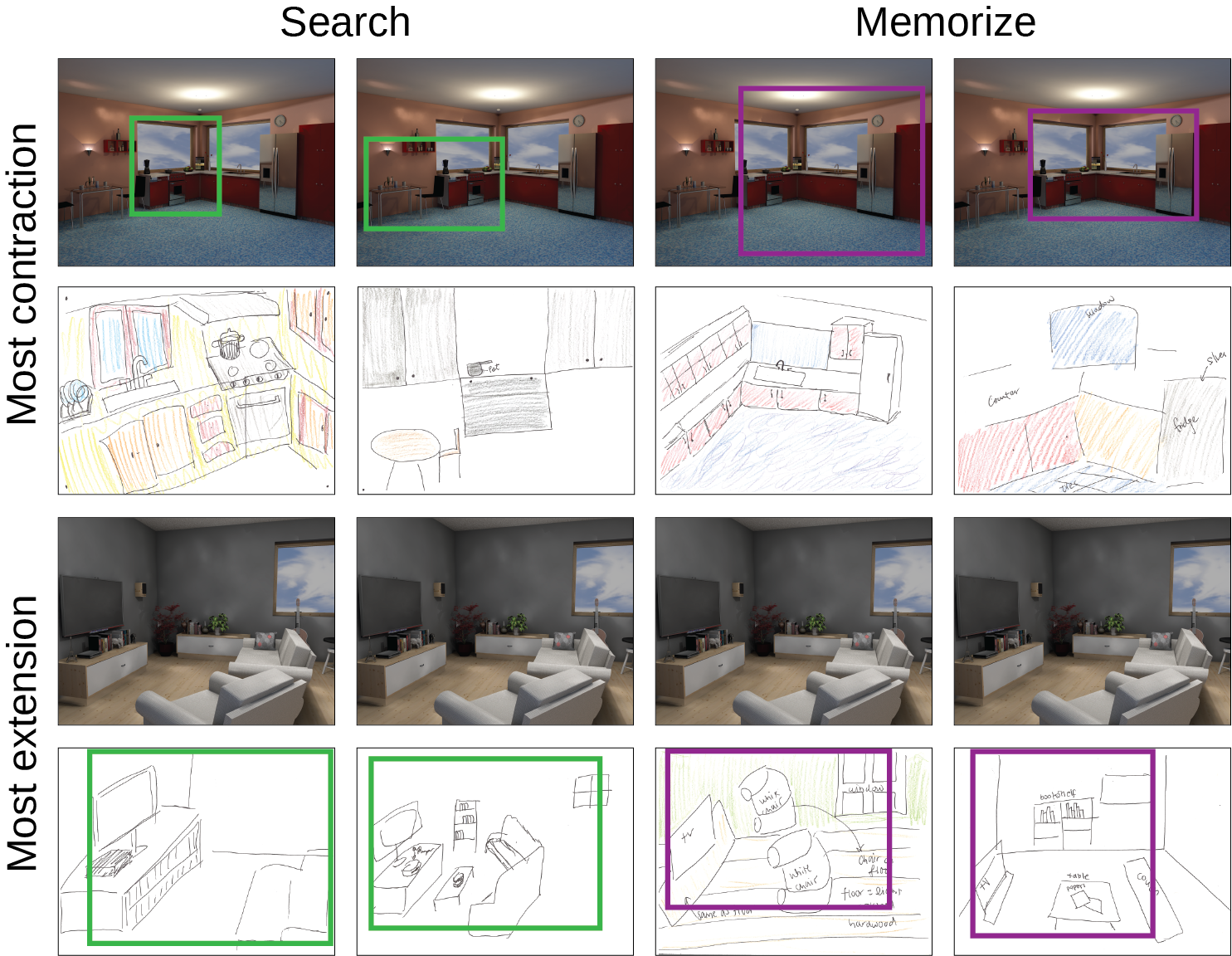

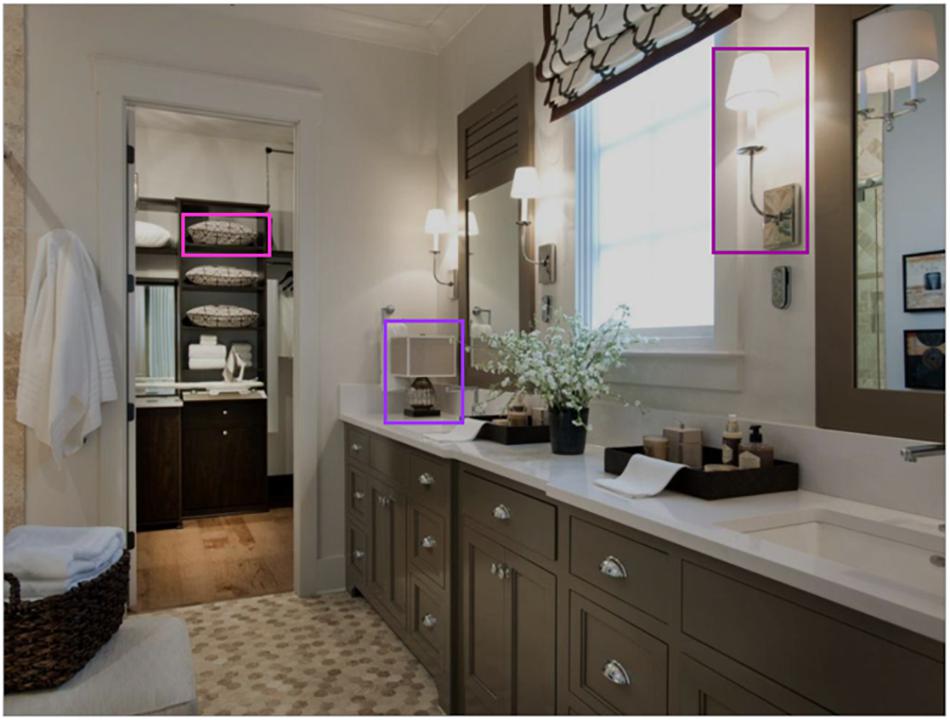

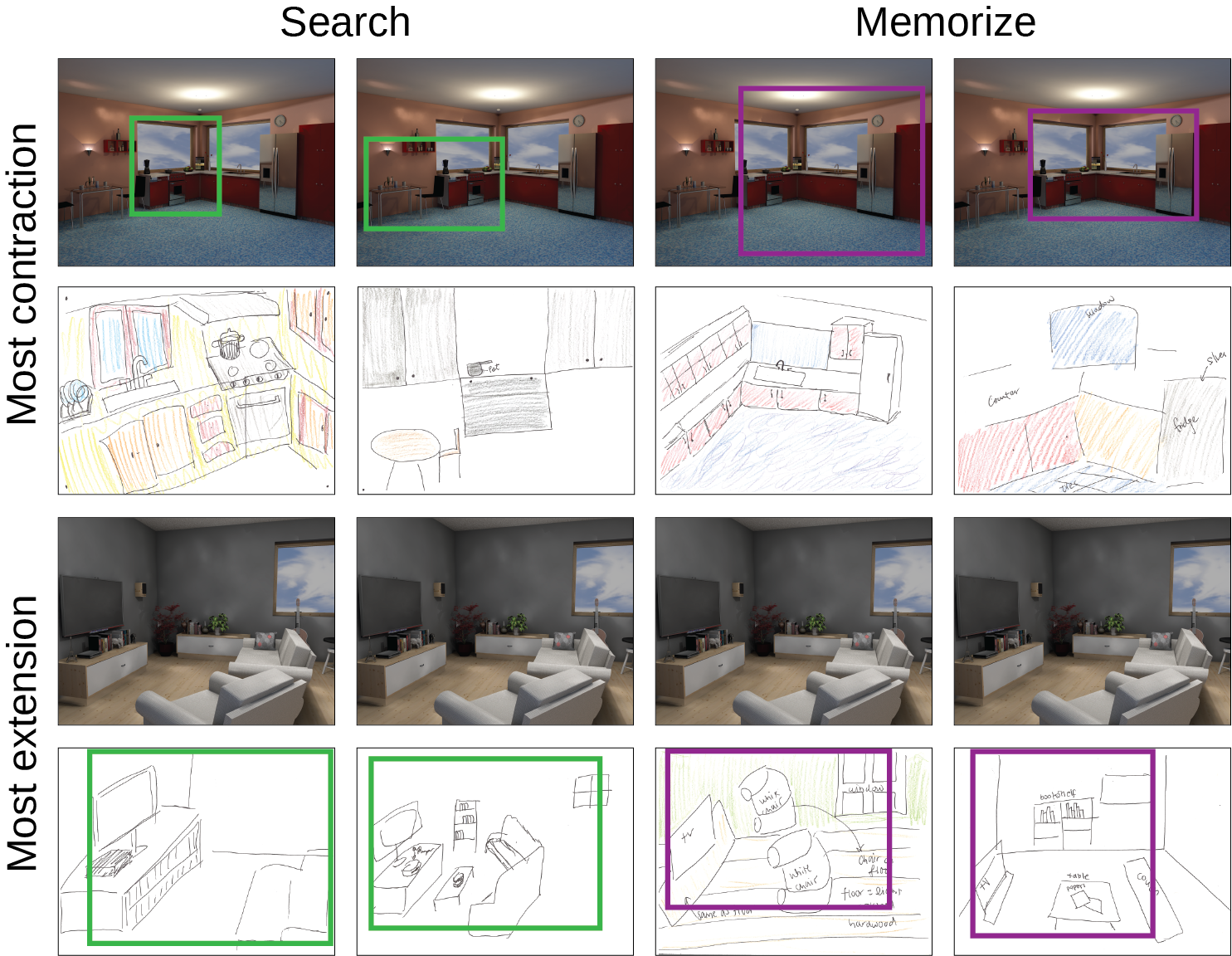

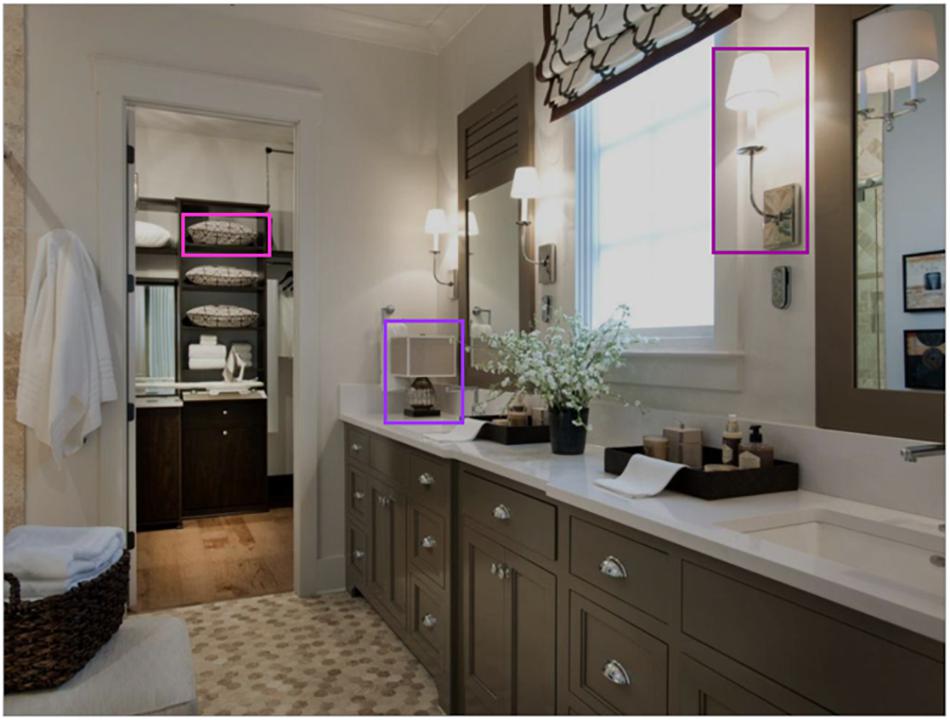

Object-based attention during scene perception elicits boundary contraction in memory

Elizabeth H. Hall, Joy J. Geng

Memory & Cognition, 2024. Code Data

We found that attending to small objects in scenes led to significantly more boundary contraction in memory, even when other image properties were kept constant. This supports the idea that extension/contraction in memory may reflect a bias towards an optimal viewing distance!

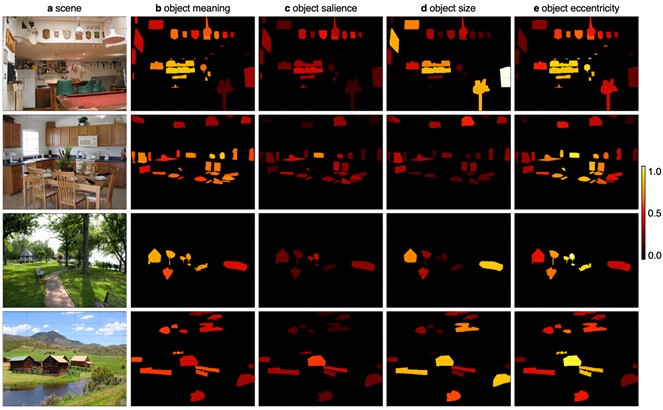

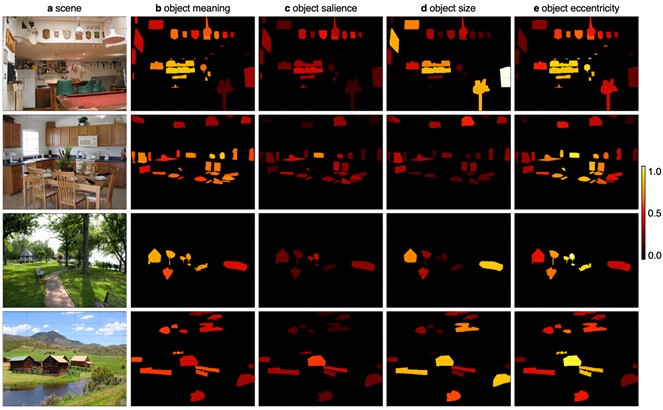

Objects are selected for attention based upon meaning during passive scene viewing

Candace Peacock*, Elizabeth H. Hall*, John M. Henderson

*co-first authors

Psychonomic Bulletin & Review, 2023. Preprint Stimuli

We looked at whether fixations were more likely to land on high-meaning objects in scenes. We found that fixations are more likely to be directed to high-meaning objects than to low-meaning objects, regardless of object salience.

An analysis-ready and quality controlled resource for pediatric brain white-matter research

Adam Richie-Halford, Matthew Cieslak, Fibr Community Science Consortium

Scientific Data, 2022.

An open-source dataset on brain white matter from 2700 New York City area children. I helped score the quality of diffusion MRI data, along with over 130 other community scientists.

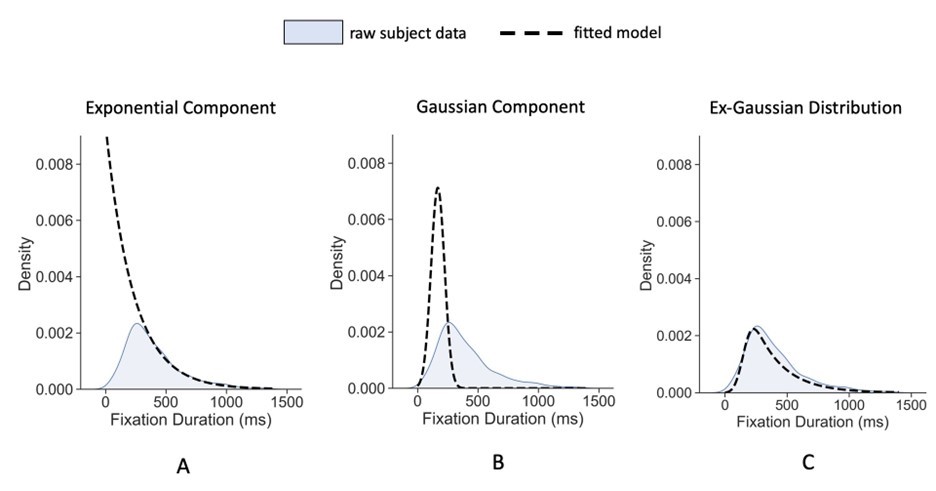

Working memory control predicts fixation duration in scene-viewing

Zoe Loh*, Elizabeth H. Hall, Deborah A. Cronin, John M. Henderson

*undergrad supervised by me

Psychological Research, 2022.

We fit scene-viewing fixation data to an ex-Gaussian distribution to look at individual differences in memory. We found that the worse a participant's memory control was, the more likely they were to have some very long fixations when encoding scene detail into memory.

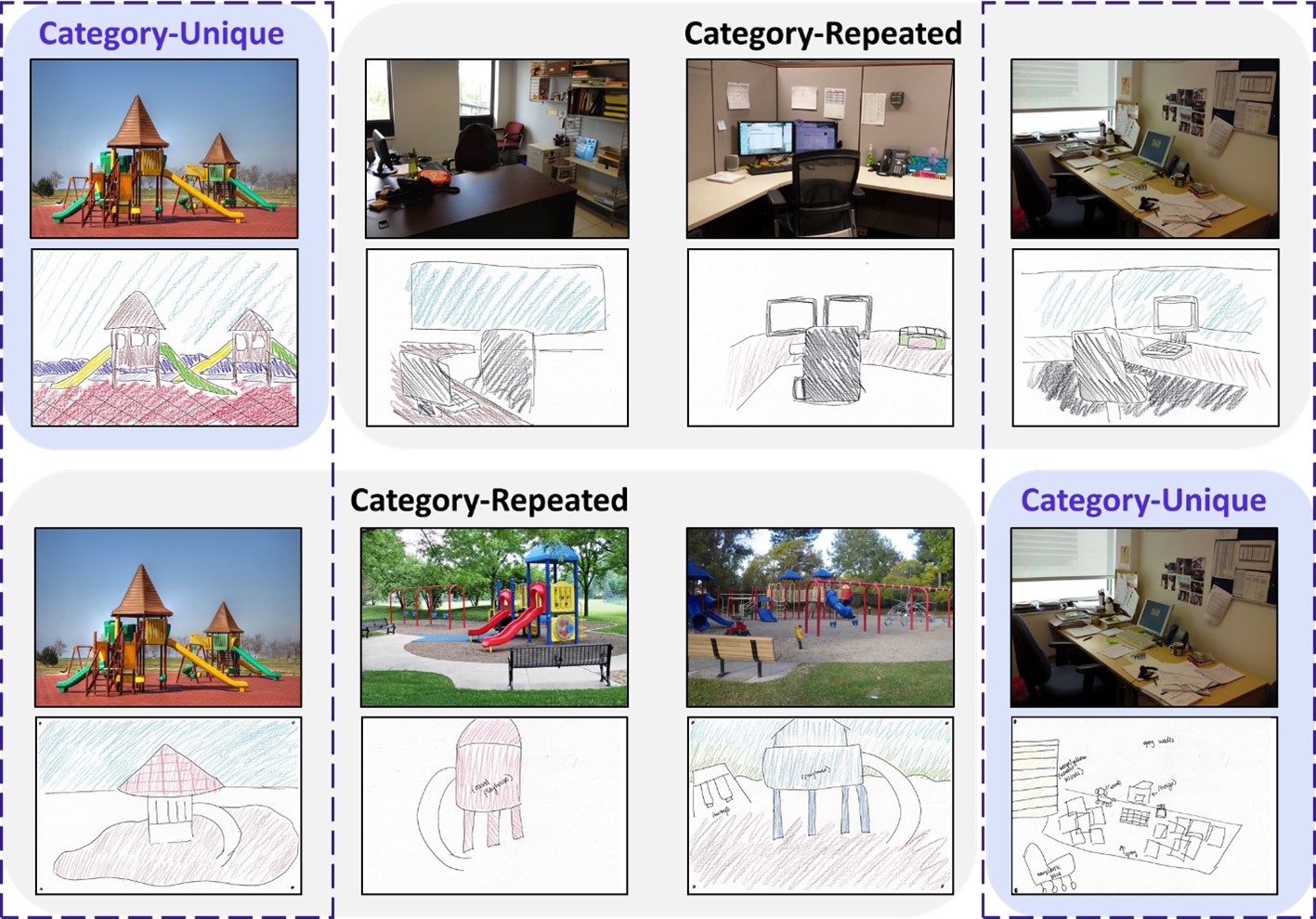

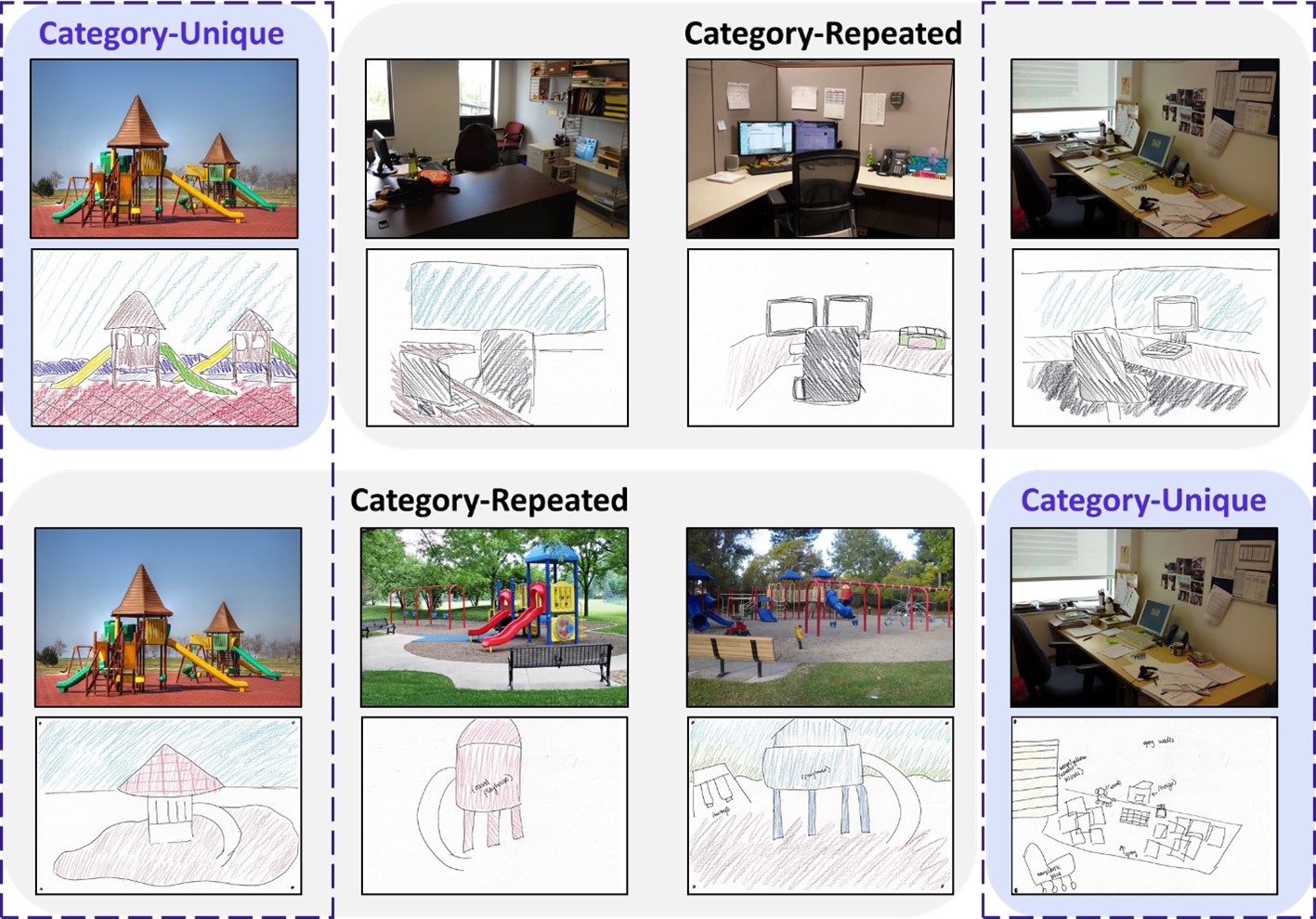

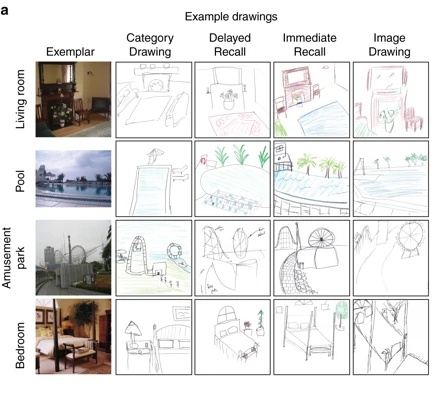

Highly similar and competing visual scenes lead to diminished object but not spatial detail in memory drawings

Elizabeth H. Hall, Wilma A. Bainbridge, Chris I. Baker

Memory, 2021. Preprint Data

We investigated the detail and errors in participants' memory when they have to recall multiple, similar scenes. We found that memory drawings of "competing" scenes have diminished object detail, but are surprisingly still fairly spatially accurate.

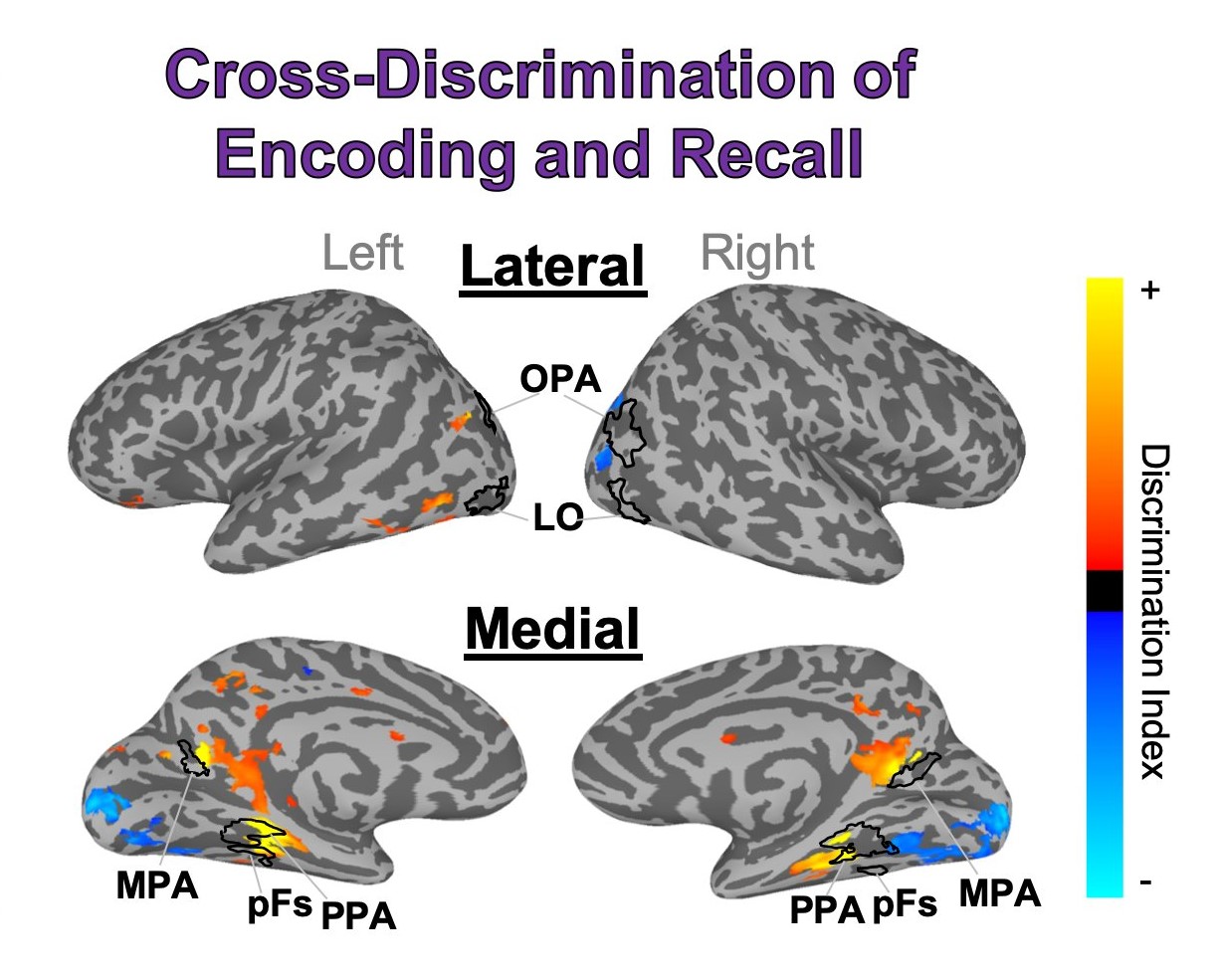

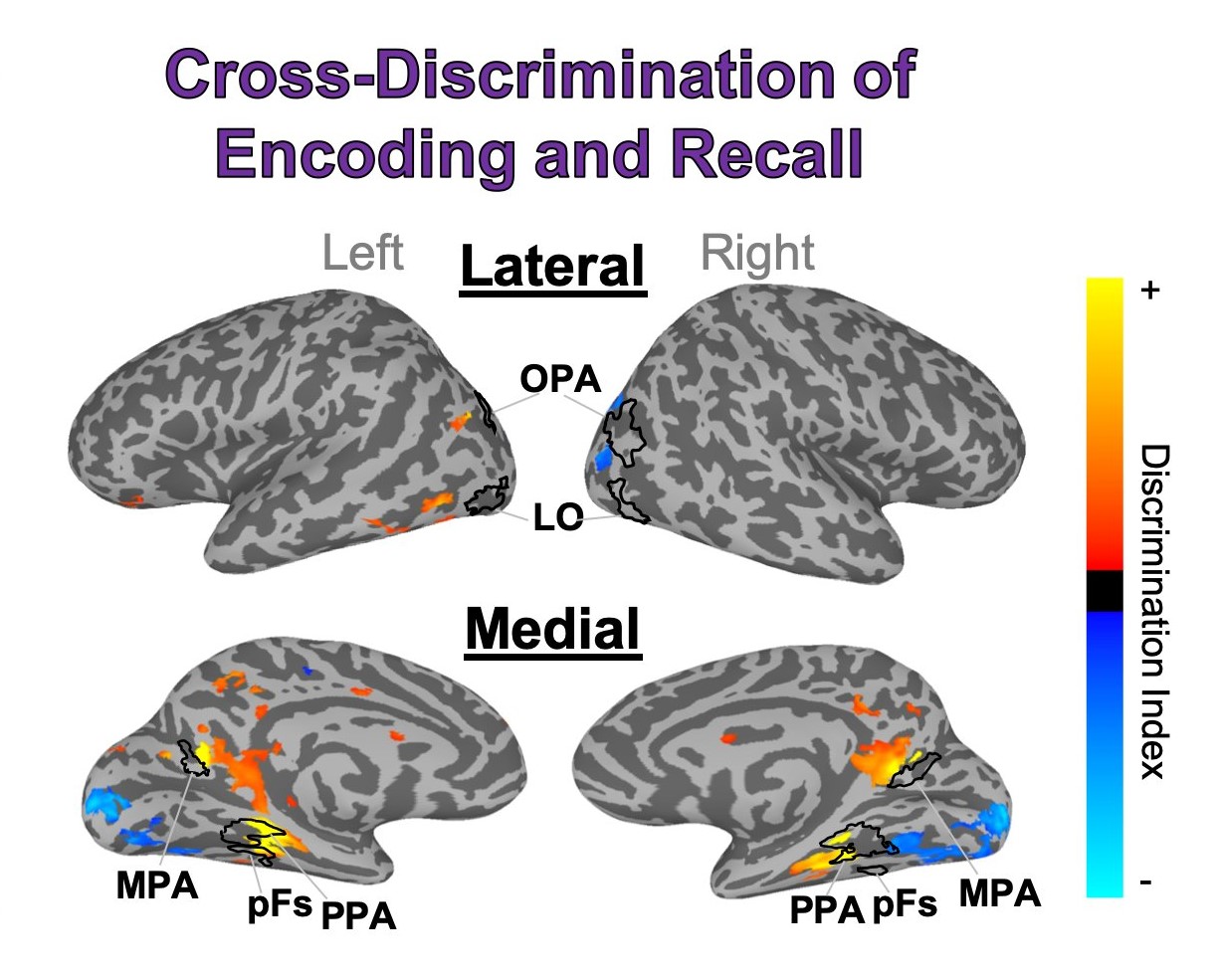

Distinct representational structure and localization for visual encoding and recall during visual imagery

Wilma A. Bainbridge, Elizabeth H. Hall, Chris I. Baker

Cerebral Cortex, 2020.

We found that representations of memory content during recall show key differences from encoding in granularity of detail & spatial distribution. We also replicated the finding that brain regions involved in scene memory are anterior to those involved in scene perception. See this article from Quanta for more on this idea!

Eye Movements in Real-World Scene Photographs: General Characteristics and Effects of Viewing Task

Deborah Cronin, Elizabeth Hall, Jessica Goold, Taylor Hayes, John Henderson

Frontiers in Psychology, 2020.

We examined the effects of viewing task on when and where the eyes move in real-world scenes during a memorization task and an aesthetic judgment task. Distribution-level analyses revealed significant task-driven differences in eye movement behavior.

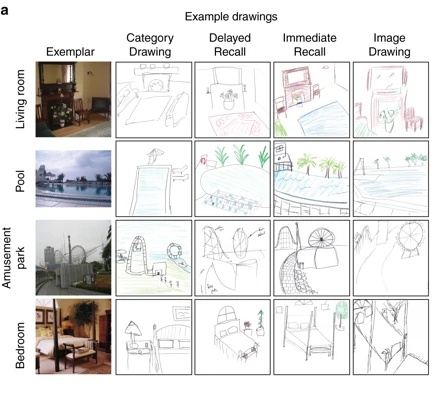

Drawings of real-world scenes during free recall reveal detailed object and spatial information in memory

Wilma A. Bainbridge, Elizabeth H. Hall, Chris I. Baker

Nature Communications, 2019. Data

Participants studied 30 scenes and drew as many images in as much detail as possible from memory. The resulting memory-based drawings were scored by thousands of online observers, revealing numerous objects, few memory intrusions, and precise spatial information. See this article from Scientific American for more!
